To address parents’ concerns over inappropriate content on YouTube being seen by children, Google today is announcing an expanded series of parental controls for its YouTube Kids application. The new features will allow parents to lock down the YouTube Kids app so it only displays those channels* that have been reviewed by humans, not just algorithms. This applies both to the content displayed within the app itself and to the recommended videos. A later update will allow parents to configure which specific videos and channels can be viewed.

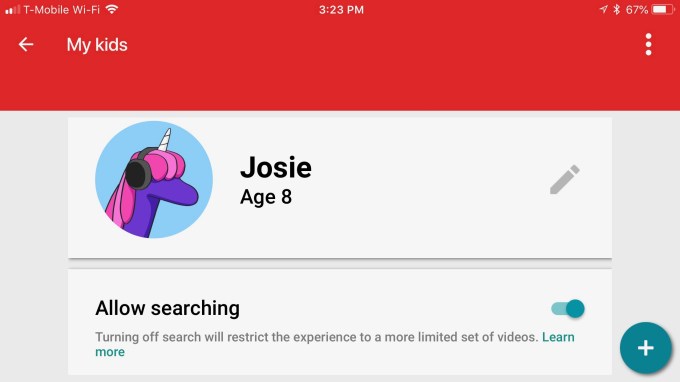

The controls will be opt-in — meaning parents will have to explicitly turn on the various settings within each child’s profile in YouTube Kids’ settings.

Launched less than three years ago, YouTube Kids has been a breakout hit — at least in terms of usage metrics. More than 11 million children launch the app weekly, and more than 70 billion videos have been viewed in the app to date. However, from a public relations standpoint, the app has been a nightmare — inappropriate, and sometimes horrific, videos have slipped past the algorithms, leading to outrage.

The issue has to do with how the YouTube Kids app works. First, videos are uploaded to YouTube’s main site. They’re then filtered by a series of machine-learning algorithms that determine whether they should be added to YouTube Kids’ catalog.

But algorithms are not people, and they make mistakes. To fill in the gaps in this imperfect system, YouTube Kids has relied on parents to flag suspect videos for review.

YouTube employs a dedicated team of reviewers for YouTube Kids, but it doesn’t say how many people are tasked with this job. (That’s a bit concerning, as it could mean it’s fewer than we’d like to see.)

This system, parents have felt for some time, just wasn’t good enough.

But instead of turning YouTube Kids into a hand-curated collection of “safe” content, the company has steadily added more controls to limit kids’ access to videos, in response to parents’ concerns.

For instance, it added a setting to disable search. But even with search disabled, kids could still be recommended videos that only an algorithm had “reviewed.”

And, as any parent will tell you, even one bad video is one too many.

A single viewing of a scary video can lead to weeks of nightmares; and videos with bad language or mature subject matter are just as awful, from a parent’s perspective.

One high-profile example of the horrors on YouTube was the wave of videos that took a child’s favorite characters and showed them in violent situations, like Peppa Pig drinking bleach or eating her father. These are offensive not just to parents, but to many people who don’t think cruel parodies are funny. And yet they kept getting uploaded to YouTube, where they confused its algorithms, much to parents’ disgust and dread.

Even beyond these extreme examples, some parents are uncomfortable with the nature of many YouTube videos themselves. We’ve found our kids watching barely disguised commercials when they’re too young to know the difference between product placements and content. We cringe as prepubescent YouTube stars sass their moms and dads and whine about doing homework. We’re sick of YouTube dictating house-ruining trends like the DIY slime craze. And sometimes, we just can’t stand to hear JoJo Siwa sing.

The updated version of YouTube Kids will let parents turn all that crap off.

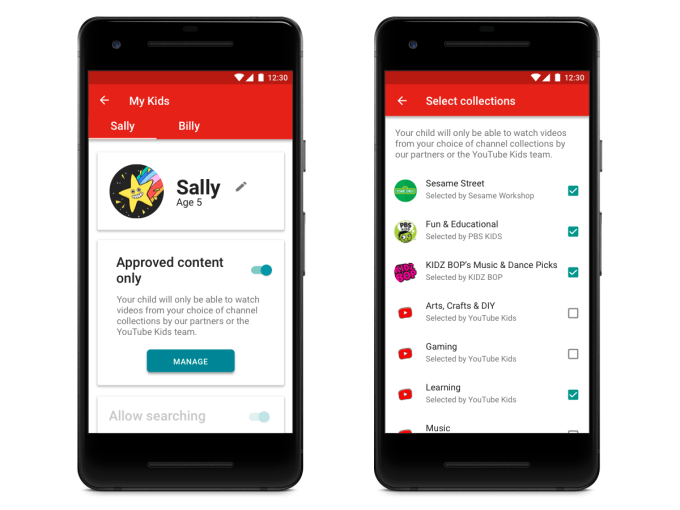

Now, parents will be able to toggle on a new setting for “Approved content only,” which also disables search.

This is different from how disabling search used to work. Before, it only removed the search box from the app, while any video from YouTube Kids’ larger catalog could still appear in recommendations. Going forward, when parents choose to turn off search, they’re also limiting recommendations to human-approved content.

At last.

What took you so long, YouTube?

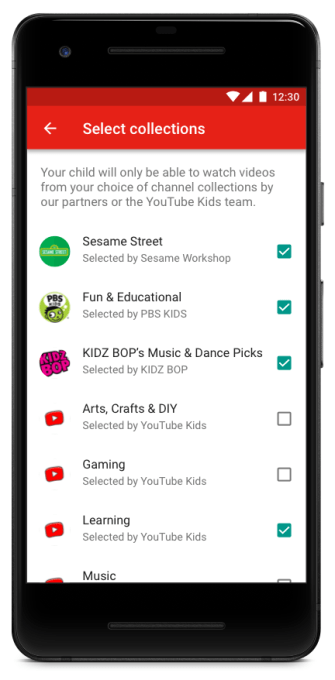

Meanwhile, within the new “Approved content only” section, parents can drill down even further to pick what human-reviewed content gets shown. They can choose from collections of videos built by YouTube and trusted partners, including Sesame Workshop, PBS Kids, and Kidz Bop. The collections span categories like “arts, crafts & DIY,” “gaming,” “learning,” “music” and more.

By default, none of these collections are selected, so parents have to make explicit choices about what kids can watch.

A later version of YouTube Kids will go even further — allowing parents to select individual videos or channels they approve of, for a truly handpicked selection.

“Over the last three years, we’ve worked hard to create a YouTube Kids experience that allows kids to access videos that are enriching, engaging, and allows them to explore their endless interests,” says Malik Ducard, Global Head of Family and Learning Content for YouTube.

“Along the way, we’ve never stopped listening to feedback and we’re continuing to improve the app. In addition to all the work our teams are doing behind the scenes to make the experience the best it can be, we’re also offering parents even more options to make the right choice for their family and each child within their family,” he adds.

Parents should probably just enable all the settings to narrow YouTube Kids down to only human-reviewed selections as soon as the option appears. (Just be prepared for the whining and begging that will result when you turn off access to favorite videos and annoying channels. My suggestion? Threaten to delete the app entirely.)

Kids never should have had unfettered access to YouTube, or even semi-filtered access like the YouTube Kids catalog, in the first place. The app should have launched as a human-reviewed catalog by default, then slowly added options to expand access over time, including the manual whitelisting of channels.

After all, this isn’t Netflix Kids over here — it’s the weird, unpredictable, and sometimes scary internet… in video format.

The new features in YouTube Kids will roll out over the course of the year, the company says, with everything but the explicit whitelisting option arriving this week.

* Post clarified to explain that the human reviewers will review “channels”; before, we wrote they would review “videos.” YouTube reached out to note the difference. It says a “channel review” includes “a human confirmation of many videos on the channel. Once the channel has been deemed appropriate and family-friendly, it becomes approved. Future videos from the channel then go through algorithmic layers of security.”
